On December 18, 2025, the official Gemini account announced on a well-known social media platform that Gemini 3 Flash had officially launched. The news landed like a boulder thrown into a calm lake, sending ripples in every direction. Few expected Google to escalate its strategy this far, positioning Gemini 3 Flash directly against the flagship models of OpenAI and Anthropic. That is no small ambition. Is Google about to set off a fierce fight in the AI market?

Performance upgrade, double breakthrough in speed and cost

Gemini 3 Flash arrives with a generous offer. According to Google, it is three times faster than Gemini 2.5 Pro and costs a quarter as much as Gemini 3 Pro, while performance has gone up rather than down. It is like an athlete who runs faster while burning less energy. Who wouldn't be tempted? Google describes it as "cutting-edge intelligence born for speed", which boils down to fast, cheap, and smart.

In actual use, however, there is still a gap between Gemini 3 Flash and the Pro version. The product can feel a step short of the advertising, like eagerly buying a high-end item only to find it slightly different from what was promised. None of this detracts from Google's precise timing. Flash arrived right behind Gemini 3 Pro and Deep Think, giving competitors no room to breathe and leaving everyone curious about what counterpunch Sam Altman will come up with.

Three models are deployed together to meet diverse needs

Starting today, you can use three differently positioned models in the Gemini product line.

1. Gemini 3 Flash (Fast): the speed specialist

Built around the word "fast", it is best suited to conversations that prioritize efficiency and do not need long chains of reasoning. When you are in a hurry and just need a simple answer, it responds quickly and saves you a lot of time.

2. Gemini 3 Flash (Thinking): the reasoning expert

Equipped with lightweight reasoning, it can simulate a human thinking process to improve accuracy on complex problems. Like a clever little detective, it does not give up easily when things get difficult, but analyzes carefully until it finds the right answer.

3. Gemini 3 Pro: the performance king

It remains the top choice for extremely difficult tasks and sets the performance ceiling. If you have a very complex, demanding task, this is the one to hand it to. It is like a superhero that takes on all kinds of challenges with ease.
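The division of labor among the three tiers can be sketched as a small routing function. This is only an illustration of the positioning described above: the `Tier` enum, the `choose_tier` function, and its two flags are hypothetical names made up for this example, and the strings are the display names used in this article, not API model identifiers.

```python
from enum import Enum


class Tier(Enum):
    # Display names as used in this article (not API model IDs).
    FLASH_FAST = "Gemini 3 Flash (Fast)"
    FLASH_THINKING = "Gemini 3 Flash (Thinking)"
    PRO = "Gemini 3 Pro"


def choose_tier(needs_reasoning: bool, extremely_hard: bool = False) -> Tier:
    """Pick a tier from two hypothetical task flags.

    - extremely_hard: the task is at the top of the difficulty range -> Pro
    - needs_reasoning: the task benefits from step-by-step thinking -> Flash (Thinking)
    - otherwise: a quick conversational answer is enough -> Flash (Fast)
    """
    if extremely_hard:
        return Tier.PRO
    if needs_reasoning:
        return Tier.FLASH_THINKING
    return Tier.FLASH_FAST


if __name__ == "__main__":
    print(choose_tier(needs_reasoning=False).value)
    print(choose_tier(needs_reasoning=True).value)
    print(choose_tier(needs_reasoning=True, extremely_hard=True).value)
```

In a real application the two flags would have to come from somewhere, for example a user setting or a simple classifier; that part is deliberately left out here.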

Benchmark scores beyond Pro, strength not to be underestimated

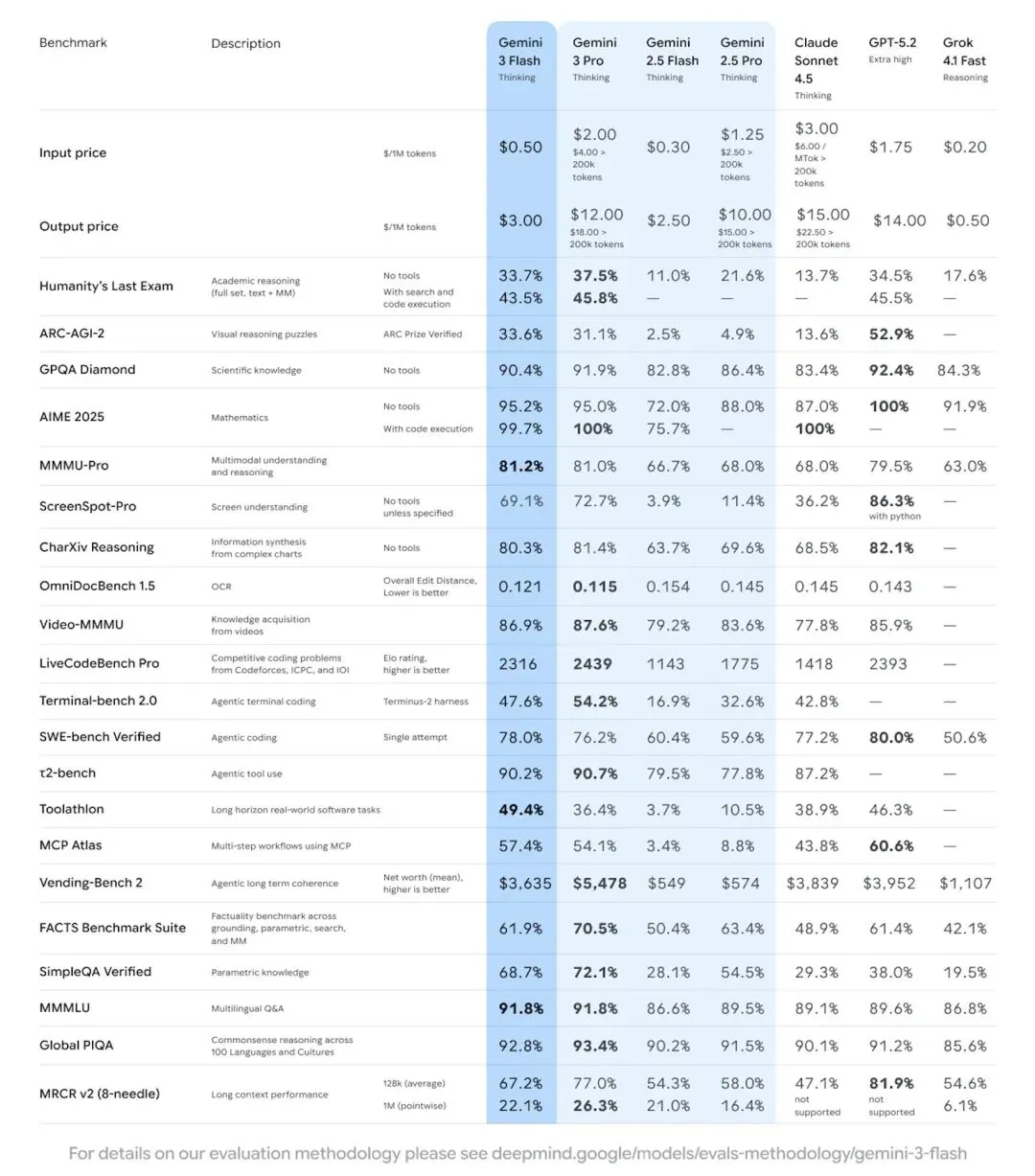

The benchmark results were quite surprising: Gemini 3 Flash retains Pro-level reasoning while bringing latency and cost down to Flash level. Specifically, on GPQA Diamond, a PhD-level reasoning test, it scores 90.4%, comparable to larger frontier models.

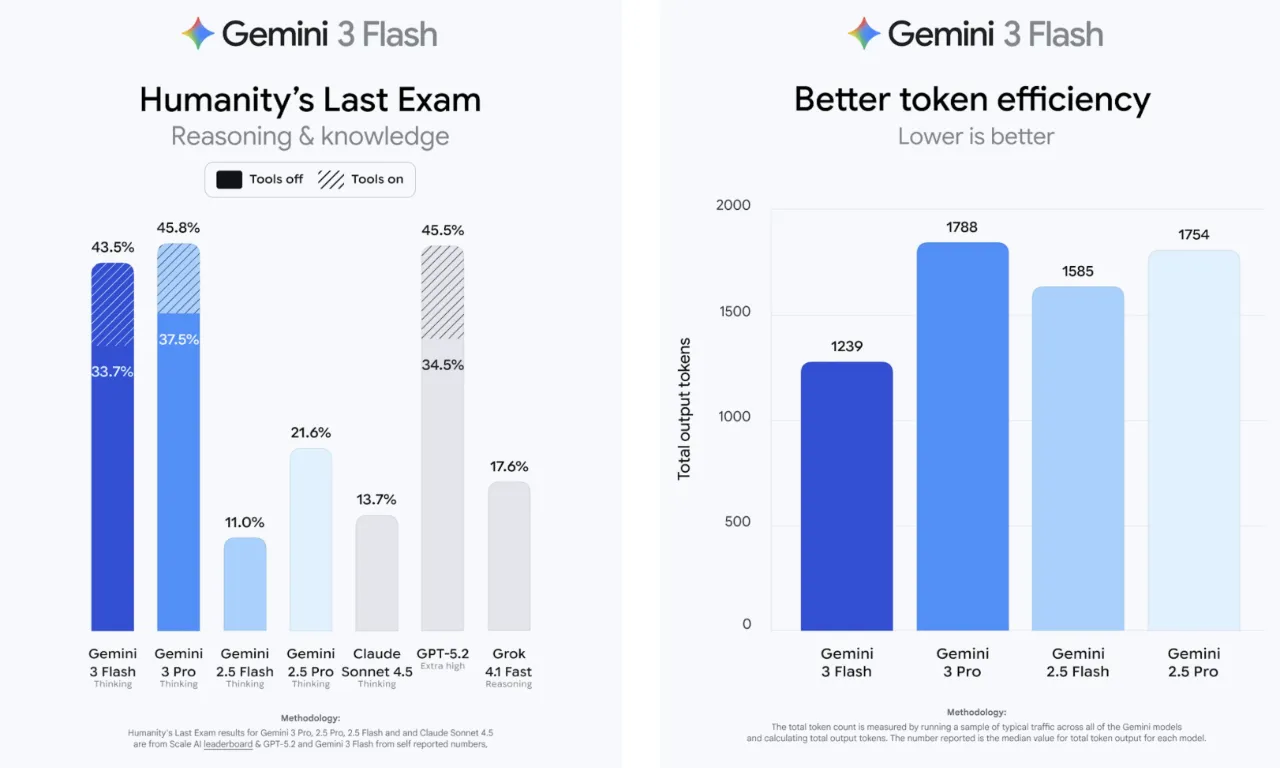

On the extremely difficult Humanity's Last Exam, it scores 33.7% without tools. More striking still, on MMMU Pro it scores 81.2%, a state-of-the-art result comparable to its own sibling, Gemini 3 Pro. This is a genuine upset!

Previously, everyone assumed the three dimensions of quality, cost, and speed were hard to balance: a model was either fast but not smart, or smart but expensive. With Gemini 3 Flash, Google has shown that with the right engineering optimization, a true all-rounder can exist.

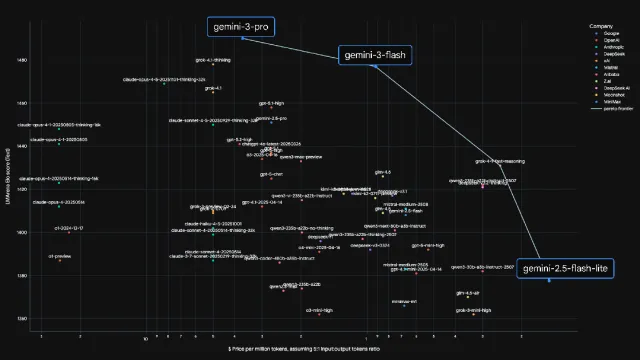

The data shows that it uses 30% fewer tokens than 2.5 Pro and runs three times faster, and the price has dropped to rock bottom: $0.50 per million input tokens and $3 per million output tokens. That price is simply too good to pass up. It seems that new AI models now have to compete not only on parameters but also on cost-effectiveness.

Strong adaptability, a blessing for developers

Gemini 3 Flash is still a reasoning model and can flexibly adjust how long it "thinks" according to task complexity. Even at its lowest thinking level, its performance often exceeds the high thinking level of previous models. This adaptability is particularly valuable in practice, because no resources are wasted using a sledgehammer to crack a nut.

For developers, Gemini 3 Flash arrives like timely rain. They used to have to trade off speed against intelligence; now they no longer have to choose.

On the SWE-bench Verified coding benchmark it scores a high 78%, outperforming not only the 2.5 series but even its own sibling, Gemini 3 Pro. Its multimodal capability is also excellent: it processes visual, audio, and other inputs faster, linking "see, hear, understand" into a fairly smooth chain that is especially suited to interactive scenarios needing immediate feedback.

Examples include analyzing golf swing videos and suggesting improvements, or recognizing sketches and predicting intent. Combined with code execution, it can not only understand image content but also process and manipulate images with toolchain support.

There are gaps in practical applications, and shortcomings still need improvement

Google has officially showcased many interesting application scenarios for Gemini 3 Flash.

For example, in a ball-launching puzzle game, it can assist with reasoning in real time, offering workable or even better solutions. In interactive UI design, it can generate loading animations and compare A/B variants with rapid iteration. Given an image, it can perform basic recognition and generate interactive annotations based on context. These demos emphasize real-time performance, iteration efficiency, and operability.

However, when I ran test cases on Gemini 3 Flash myself, I found that its responses are indeed extremely fast compared with Gemini 3 Pro, but the results are fairly average, with visual and interactive detail clearly sacrificed.

Take a macOS interface replica as an example: the result is somewhat lackluster, with icons clearly missing from the Dock at the bottom and interaction details less refined than Gemini 3 Pro's.

In the "Retro Object Style Camera Application" design task, the gap is even more obvious: the generated single-page application still falls well short of the intended visual result.

When creating the "Planet Signal" web page, it produced some interactive details, but the overall page is still slightly rough and lacks a refined sense of design.

Embedded across the product suite: Google's strategy

Google has not only launched Gemini 3 Flash but also integrated it into AI Mode in Search (currently unavailable in China), which is gradually rolling out worldwide. Compared with previous versions, it better understands the details of complex questions, pulls real-time information and useful links from across the web, and outputs comprehensive answers that are visually clearer and better organized.

At the same time, Gemini 3 Flash is becoming the default foundation of Google's product suite. The Gemini app, AI Mode in Search, Vertex AI, Google AI Studio, Antigravity, and Gemini CLI have all rolled it out. Users worldwide can try it for free, while enterprise users can access it through Vertex AI and Gemini Enterprise.

Finally, let me stress the price once more: $0.50 per million input tokens, $3 per million output tokens, and $1 per million audio input tokens. By that math, it costs less than a quarter of what Gemini 3 Pro does. With context caching, the cost of repeated tokens drops by a further 90%; with the Batch API for asynchronous processing, you save a further 50% and also get higher call limits. For synchronous or near-real-time scenarios, paid API users get rate limits high enough for production.
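To make these numbers concrete, here is a rough cost estimator built only from the prices and discounts quoted above. It is a back-of-the-envelope sketch, not an official billing formula. The function name `estimate_cost` and its parameters are made up for this example, and two things are assumptions: that the 90% caching discount applies only to the cached portion of text input tokens, and that the 50% batch discount applies to the whole bill. Cache storage fees and any other charges are ignored.

```python
# Prices quoted in this article, in USD per million tokens.
INPUT_PRICE = 0.50
OUTPUT_PRICE = 3.00
AUDIO_INPUT_PRICE = 1.00

CACHE_DISCOUNT = 0.90  # article: context caching saves 90% on repeated tokens
BATCH_DISCOUNT = 0.50  # article: Batch API saves 50%


def estimate_cost(
    input_tokens: int,
    output_tokens: int,
    cached_tokens: int = 0,
    audio_tokens: int = 0,
    batch: bool = False,
) -> float:
    """Estimate the cost in USD of one workload (hypothetical helper).

    cached_tokens is the part of input_tokens served from the context cache.
    Assumption: the cache discount applies to cached text input only, and
    the batch discount applies to the total.
    """
    if not 0 <= cached_tokens <= input_tokens:
        raise ValueError("cached_tokens must be between 0 and input_tokens")

    fresh_tokens = input_tokens - cached_tokens
    total = (
        fresh_tokens * INPUT_PRICE
        + cached_tokens * INPUT_PRICE * (1 - CACHE_DISCOUNT)
        + audio_tokens * AUDIO_INPUT_PRICE
        + output_tokens * OUTPUT_PRICE
    ) / 1_000_000

    if batch:
        total *= 1 - BATCH_DISCOUNT
    return total


if __name__ == "__main__":
    # 1M input tokens and 200k output tokens, no discounts.
    print(f"${estimate_cost(1_000_000, 200_000):.2f}")
    # Same workload with 800k of the input tokens served from cache.
    print(f"${estimate_cost(1_000_000, 200_000, cached_tokens=800_000):.2f}")
    # Same workload sent through the Batch API instead.
    print(f"${estimate_cost(1_000_000, 200_000, batch=True):.2f}")
```

Under these assumptions, a workload of one million input tokens and 200,000 output tokens comes to about $1.10 at list price, about $0.74 with 800,000 of the input tokens cached, and about $0.55 through the Batch API.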

It is worth mentioning that Google's progress in AI is closely tied to its long experience in search. Just as a search engine must keep optimizing its algorithms to improve result quality, Google brings its own distinctive technology and experience to training and optimizing AI models.

Cutthroat competition among giants benefits users

When the marketing packages Flash as "almost Pro level", users will naturally judge it by Pro standards. In scenarios that involve complex reasoning, long-chain tasks, or higher stability requirements, Flash's shortcomings become more apparent. Still, Google's biggest trump card is traffic. Search, YouTube, Gmail, Google Maps: billions of people use these products every day. By embedding Gemini 3 Flash into these high-frequency applications, Google can surround users with its AI services seamlessly and naturally, in the settings they know best. This is a playbook that OpenAI and Anthropic cannot copy.

On the one hand, Google is wealthy and has the capital to burn money competing for the market. On the other hand, its accumulated infrastructure and engineering optimization, including TPUs, data centers, and distributed training, really do help it cut costs. It offers B2B API services while also building AI capabilities directly into its own products, reaching a huge number of ordinary users. Once users get used to AI Mode in Search and to conversations in the Gemini app, they naturally come to depend on Google's AI. This is Google's real strategy.

Of course, this kind of cutthroat competition among giants is brutal for the industry, but it is definitely good for users. Models get stronger, prices get lower, developers can innovate at low cost, and ordinary people enjoy smarter services. These are probably among the few sure dividends of this AI arms race. Let's look forward together to more surprises from the AI field!