Large language model (LLM) agents have attracted considerable attention, and how to store and use experience effectively has become a key challenge in building them. Humans rely on accumulated experience to keep improving, and agents are no exception: an agent that cannot make good use of its experience will struggle to complete tasks in complex, ever-changing environments.

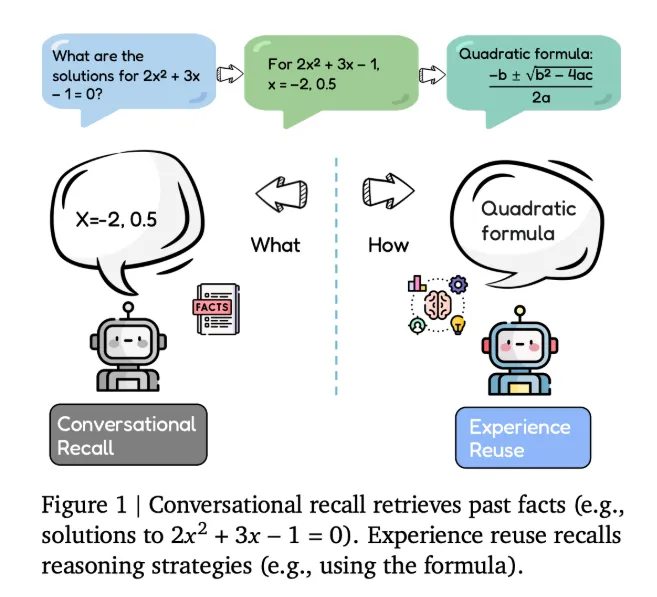

Traditional agents mainly rely on conversational recall to store information. They save conversation history, tool-usage records, and document retrieval results, hoping to reassemble this information for future queries. This is only passive buffering: it does not actively change the agent's strategy for handling related tasks. It is like a person who writes down what they did without reflecting on or summarizing it, and who is no better prepared the next time a similar situation arises.

Evo Memory: a streaming benchmark and agent framework

Recently, a research team from the University of Illinois at Urbana-Champaign and Google DeepMind released Evo Memory, a streaming benchmark and agent framework aimed at these shortcomings. Evo Memory evaluates not only an agent's ability to learn at test time but also, specifically, its self-evolving memory. It tests whether agents can accumulate and reuse strategies across a continuous stream of tasks rather than relying solely on static conversation records.

The research team formalizes a memory-augmented agent as a four-part tuple (F, U, R, C). Here, F is the base model, R is the retrieval module, C is the context construction step, and U is the update step that writes new experience and evolves the memory after each step. Evo Memory restructures datasets into ordered task streams and uses them to evaluate agents across a variety of environments.
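The (F, U, R, C) tuple can be pictured as four pluggable functions that run once per task in the stream. The following is a minimal sketch under stated assumptions, not the authors' implementation: the class name `MemoryAgent`, the function signatures, the list-of-strings memory, and all `toy_*` components are hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class MemoryAgent:
    """Hypothetical container for the (F, U, R, C) tuple; signatures are assumptions."""
    F: Callable[[str], str]                         # base model: prompt -> output
    U: Callable[[List[str], str, str], List[str]]   # update: (memory, task, output) -> new memory
    R: Callable[[List[str], str], List[str]]        # retrieval: (memory, task) -> relevant entries
    C: Callable[[str, List[str]], str]              # context construction: (task, retrieved) -> prompt
    memory: List[str] = field(default_factory=list)

    def step(self, task: str) -> str:
        retrieved = self.R(self.memory, task)            # look up relevant experience
        prompt = self.C(task, retrieved)                 # build the model input
        output = self.F(prompt)                          # call the base model
        self.memory = self.U(self.memory, task, output)  # evolve memory after the step
        return output


def run_stream(agent: MemoryAgent, tasks: List[str]) -> List[str]:
    """Feed an ordered task stream to the agent, one task at a time."""
    return [agent.step(task) for task in tasks]


# Toy stand-ins so the sketch runs without a real model or retriever.
def toy_model(prompt: str) -> str:
    # Each retrieved memory occupies one line above the task line.
    return f"used {prompt.count(chr(10))} memories"


def toy_retrieve(memory: List[str], task: str) -> List[str]:
    keyword = task.split()[0]
    return [entry for entry in memory if keyword in entry]


def toy_context(task: str, retrieved: List[str]) -> str:
    return "\n".join(retrieved + [task])


def toy_update(memory: List[str], task: str, output: str) -> List[str]:
    return memory + [f"{task} -> {output}"]


agent = MemoryAgent(F=toy_model, U=toy_update, R=toy_retrieve, C=toy_context)
outputs = run_stream(agent, ["sort list a", "sort list b", "parse date"])
```

The point of the ordered stream is visible even in this toy: the second "sort" task can draw on the experience written by the first, while the unrelated "parse" task finds nothing to reuse.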

To establish a baseline, the research team also defines ExpRAG. It converts each interaction into structured experience text. On a new task, the agent retrieves similar experiences and combines them with the current input.
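As a rough illustration of the ExpRAG idea, the sketch below stores each interaction as structured text, retrieves the most similar past experiences, and prepends them to the new task. It is a sketch under assumptions rather than the paper's method: the `Experience` fields, the text template, and the word-overlap (Jaccard) similarity are all hypothetical, and the similarity in particular is a simple stand-in for whatever retriever the authors use.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Experience:
    """One past interaction; the field names are assumptions for illustration."""
    task: str
    output: str
    feedback: str

    def to_text(self) -> str:
        # Structured experience text that can be pasted into a prompt.
        return f"Task: {self.task}\nOutput: {self.output}\nFeedback: {self.feedback}"


def jaccard(a: str, b: str) -> float:
    """Word-overlap similarity, used here as a stand-in for a real retriever."""
    words_a, words_b = set(a.lower().split()), set(b.lower().split())
    union = words_a | words_b
    return len(words_a & words_b) / len(union) if union else 0.0


def retrieve(store: List[Experience], query: str, k: int = 2) -> List[Experience]:
    """Return the k stored experiences whose task text is most similar to the query."""
    ranked = sorted(store, key=lambda e: jaccard(e.task, query), reverse=True)
    return ranked[:k]


def build_prompt(store: List[Experience], query: str, k: int = 2) -> str:
    """Combine retrieved experiences with the current input."""
    blocks = [e.to_text() for e in retrieve(store, query, k)]
    return "\n\n".join(blocks + [f"Task: {query}"])


store = [
    Experience("heat the mug in the microwave", "open microwave, insert mug, start", "success"),
    Experience("find the red book", "searched the desk only", "failure"),
    Experience("cool the mug in the fridge", "open fridge, insert mug, wait", "success"),
]
query = "heat the apple in the microwave"
top = retrieve(store, query, k=1)
prompt = build_prompt(store, query, k=2)
```

Keeping the feedback field in the stored text lets the model see which past attempts succeeded and which failed, not just what was tried.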

ReMem framework: turning memory into dynamically editable objects

Imagine an agent as a sharp student who keeps learning new material while flexibly applying what was learned before.

The ReMem framework takes a different approach to memory. It introduces a control loop of thinking, acting, and memory refinement, allowing the agent to actively retrieve, prune, and reorganize its memory during reasoning. Memory is thus no longer a static store but an explicit object that can be edited dynamically during reasoning.
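One way to picture such a loop is a small dispatcher in which, at each iteration, the agent chooses to think, refine its memory, or act. The sketch below is an assumption-laden illustration, not ReMem itself: the operation names, the `Policy` signature, the substring-based pruning rule, and the scripted policy (standing in for an LLM) are all hypothetical.

```python
from typing import Callable, List, Optional, Tuple

# A policy maps (task, memory, trace) to an (operation, argument) pair.
# In a real agent this would be an LLM call; here it is any Python callable.
Policy = Callable[[str, List[str], List[str]], Tuple[str, str]]


def remem_step(
    task: str, memory: List[str], policy: Policy, max_iters: int = 8
) -> Tuple[Optional[str], List[str]]:
    """Run a think / refine / act loop for one task, editing `memory` in place."""
    trace: List[str] = []
    for _ in range(max_iters):
        op, arg = policy(task, memory, trace)
        if op == "think":
            # Record an intermediate reasoning note.
            trace.append(f"think: {arg}")
        elif op == "refine":
            # Prune every memory entry that contains the given marker.
            memory[:] = [entry for entry in memory if arg not in entry]
            trace.append(f"refine: pruned entries containing '{arg}'")
        elif op == "act":
            # Commit to an action and write the new experience to memory.
            trace.append(f"act: {arg}")
            memory.append(f"{task} -> {arg}")
            return arg, trace
        else:
            raise ValueError(f"unknown operation: {op}")
    return None, trace  # iteration budget exhausted without acting


def scripted_policy(task: str, memory: List[str], trace: List[str]) -> Tuple[str, str]:
    """Hypothetical stand-in for the model: think once, prune stale notes, then act."""
    if not trace:
        return ("think", "check which memories are still relevant")
    if any("outdated" in entry for entry in memory):
        return ("refine", "outdated")
    return ("act", "open drawer")


memory = ["outdated: key is under the mat", "keys are usually in drawers"]
action, trace = remem_step("find the key", memory, scripted_policy)
```

The design choice worth noting is that refinement is an operation the agent selects during reasoning, on the same footing as thinking and acting, rather than a fixed post-processing step applied to the memory store.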

Experimental results: significant improvement in agent performance

The results show that agents with self-evolving memory, such as ReMem and ExpRAG, perform significantly better at test time. They complete tasks in fewer steps, with higher success rates and accuracy. It is like a student with average grades who improves sharply after adopting better study methods.

Compared with traditional agents, Evo Memory emphasizes experience reuse. It treats each interaction as an experience containing input, output, and feedback, and evaluates whether the agent can retrieve these experiences in later tasks and turn them into reusable strategies. This is a clear advantage over the passive memory of traditional agents.

Future development: new directions for LLM agents

This research points to a new direction for LLM agents. As the technology matures, there is reason to expect future LLM agents to be more capable and efficient, and to play important roles in more fields. The work from Google DeepMind and its collaborators lights a path forward for the field.

In short, the editor believes that the Evo Memory benchmark and ReMem framework, released by Google DeepMind on December 1, 2025, open up new possibilities for experience reuse in LLM agents. We look forward to seeing how they perform.